Last edited by @suen 2024-10-15T23:16:31Z

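Without language and writing there would of course be no GPT as we know it, but unless we break free of language and writing, AGI will stay at least two layers away. This person and Fei-Fei Li are already on the road to moving beyond language and writing...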

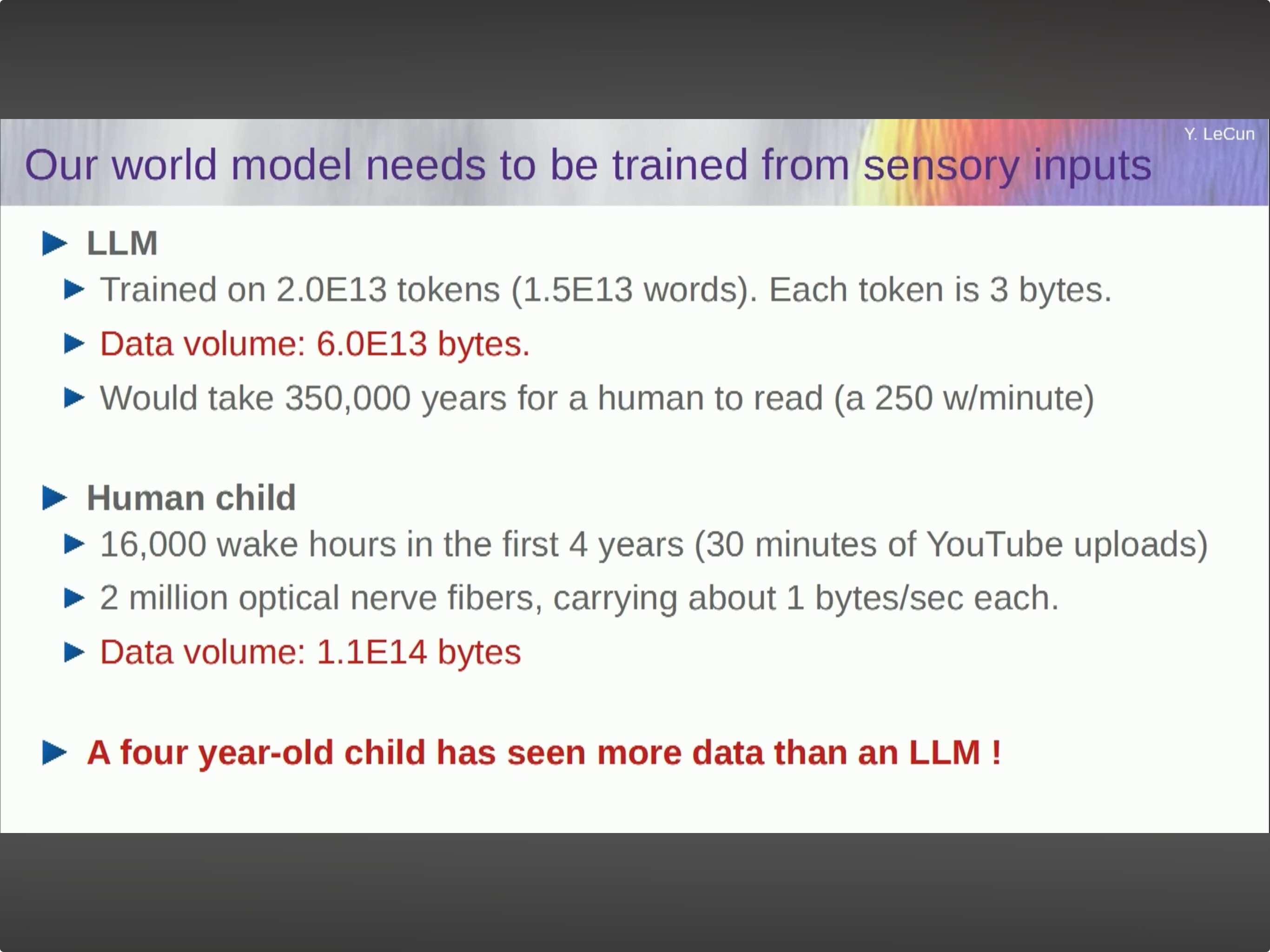

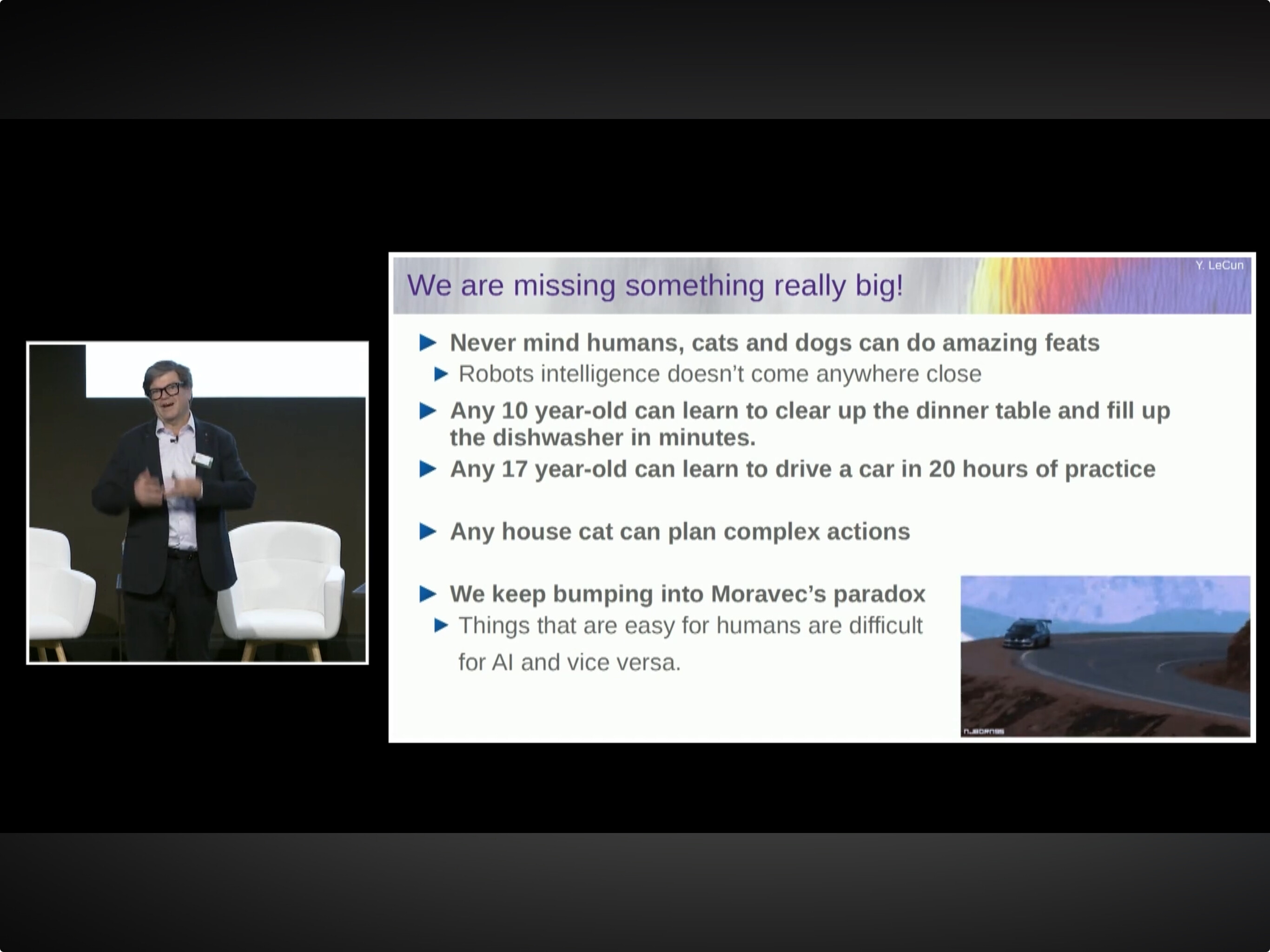

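In the end, language and writing have boundaries. Of the six senses (eye, ear, nose, tongue, body, mind), what is spoken and heard may never even have been the best tool for describing the world; a World Model is the more ultimate choice.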

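Imagine every black mirror linked in real time and every CPU/GPU computing on top of a single world model: humanity would gain an all-seeing eye and reach the next level of civilization. It is because he sees this far that Yann LeCun so fiercely disparages current models and R&D approaches and puts forward the notion of HLAI, while pushing open source without the slightest hesitation. It is also why Meta gives the human eye, which cannot record, a pair of glasses that can be worn all the time and so record everything it sees... pic.twitter.com/UhcVqH803c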

— suen (@ieduer) October 16, 2024

What was that Black Mirror book called again 😭

Whoa